Feb 18, 2024

OpenAI Unveils Text-To-Video Tool Sora Transcript

OpenAI unveiled a new tool called Sora that turns text into video. Read the transcript here.

Transcribe Your Own Content

Try Rev and save time transcribing, captioning, and subtitling.

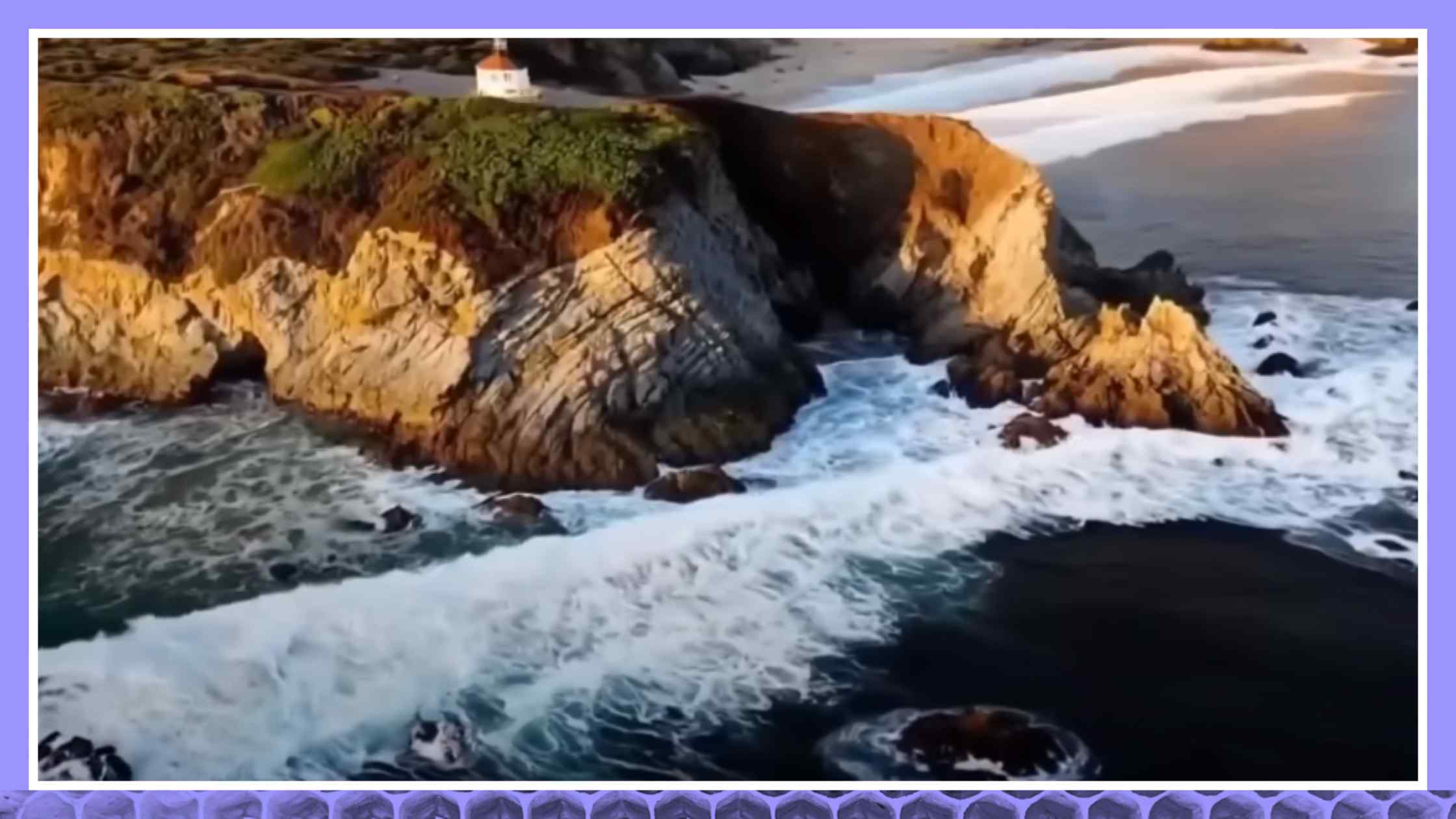

Tom (00:00):

It is a beautiful drone shot, the kind of video that you might see in a travel video, right? Except it’s not real. There is no drone. There is no camera. You can’t travel because the video was generated by AI.

(00:14)

It’s from a new tool just announced a few hours ago by OpenAI called Sora. All it takes is typing in a short text, a prompt, and in minutes it spits out a 60-second video clip of pretty much anything you can imagine. Brian Cheung is here to break this all down for us. This is cool, Brian. I mean, it could mean millions of people, though, could be out of work? Because who did all this video and what kind of an impact might it have on the jobs market?

Brian Cheung (00:45):

Yeah, I mean, it’s really mind-blowing. Some other video that we saw was of a woolly mammoth in a tundra. We also saw a little monster lighting up a candle, and none of it was done by any animators. All you have to do is enter in a text prompt, you hit generate, and it creates what you see in front of you.

(01:00)

Again, nothing was shot in this case. It’s all AI generated. And obviously this is of concern in Hollywood where you have animators, illustrators, visual effects workers who are wondering, how is this going to affect my job? And we have estimates from trade organizations and unions that have tried to project the impact of AI.

(01:18)

21% of US film, TV and animation jobs predicted to be partially or wholly replaced by generative AI by just 2026, Tom. This is already happening. And I have to also point out this tech is from the makers of ChatGPT, so very much a big technological jump for that company.

Tom (01:35):

But scary. I mean, Brian, what if I typed in right now I want Brian Cheung to tell me about the stock markets, and then I want him to walk down the street and buy a Starbucks?

Brian Cheung (01:42):

You wouldn’t need me.

Tom (01:43):

Could it do that?

Brian Cheung (01:44):

It might, and you wouldn’t need me. But here’s the thing. There are some things inside the announcement today that came from OpenAI with regards to the way that they would protect you, for example, using my likeness.

(01:54)

“Usage policies that they already have in place,” the company says, “will reject violent or hateful images, sexual content, celebrity likenesses, IP of others.” They also say that they’re engaging policymakers, educators, and artists as they develop and test this technology. Which by the way, OpenAI tells me they don’t plan on releasing to the public anytime soon.

(02:14)

But regardless, even if they say that, what we’ve seen is that in practice it’s hard to stop. The issues with deepfake porn, for example, with Taylor Swift’s likeness, just illustrate how difficult it is to police these things. With video, it’s very much an issue.

Tom (02:29):

Yeah. And you got to worry about the impact on elections. We’re already seeing AI used in fake election campaigns. You got to worry if it’s going to get this sophisticated, you can’t tell the difference, where is this headed? I got 10 seconds for you.

Brian Cheung (02:40):

Yeah. And that’s the thing where they’re trying to consult, again, policymakers and industry groups. But what that yields in the long run, it remains to be seen. But again, not yet released to the public, but at some point, it very much could be very prevalent in the entertainment industry and beyond, Tom.
