What is WER? What Does Word Error Rate Mean?

Automatic speech recognition (ASR) technology uses machines and software to identify and process spoken language. It can also be used to authenticate a person’s identity by their voice. This technology has advanced significantly in recent years, but does not always yield perfect results.

In the process of recognizing speech and translating it into text form, some words may be left out or mistranslated. If you have used ASR in some capacity, you probably have encountered the phrase “word error rate” (WER).

Let’s take a look at the idea of WER, how to calculate it, and why it matters.

How do you calculate word error rate?

It may seem like a complicated idea, but the method for calculating basic WER is actually pretty simple. Basically, WER is the number of errors divided by the total words.

To get the WER, start by adding up the substitutions, insertions, and deletions that occur in a sequence of recognized words. Divide that number by the total number of words originally spoken. The result is the WER.

To put it in a simple formula, Word Error Rate = (Substitutions + Insertions + Deletions) / Number of Words Spoken

But how do you add up those factors? Let’s look at each one:

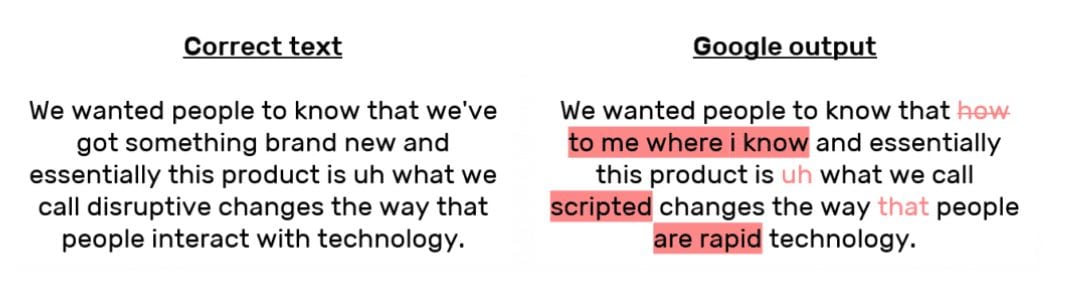

- A substitution occurs when a word gets replaced (for example, “noose” is transcribed as “moose”)

- An insertion is when a word is added that wasn’t said (for example, “SAT” becomes “essay tea”)

- A deletion happens when a word is left out of the transcript completely (for example, “turn it around” becomes “turn around”)

Let’s say that a person speaks 29 total words in an original transcription file. Among those words spoken, the transcription included 11 substitutions, insertions, and deletions.

To get the WER for that transcription, you would divide 11 by 29 to get 0.379. That rounds up to .38, making the WER 38 percent.

Where did the word error rate calculation come from?

The WER calculation is based on a measurement called the “Levenshtein distance.” The Levenshtein distance is a measurement of the differences between two “strings.” In this case, the strings are sequences of letters that make up the words in a transcription.

Let’s look at the error examples we used earlier: “noose” and “moose.” Since just a single letter is changed, the Levenshtein distance is only 1. The Levenshtein distance is more than four times as much for “SAT” and “essay tea,” since in transcription “SA” becomes “essay” by adding 3, and “T” becomes “tea” by adding 2.

Why does word error rate matter?

WER is an important, common metric used to measure the performance of the speech recognition APIs used to power interactive voice-based technology, like Siri or the Amazon Echo.

Lower WER often indicates that the ASR software is more accurate in recognizing speech. A higher WER, then, often indicates lower ASR accuracy.

Scientists, developers, and others who use ASR technology may consider WER when choosing a product for a specific purpose. ASR developers may also calculate and track WER over time to measure how their software has improved.

WER can also be used at the consumer level, to help when choosing an automatic transcription service or ASR app.

Is word error rate a good way to measure accuracy?

As we’ve seen, WER can be very important for choosing a transcription or ASR service. However, it is not the only factor you should use when deciding how accurate a service or software may be. Here are a couple reasons why:

Source of errors

WER does not account for the reason why errors may happen. Factors that can affect WER, without necessarily reflecting the capabilities of the ASR technology itself, include:

- Recording quality

- Microphone quality

- Speaker pronunciation

- Background noise

- Unusual names, locations, and other proper nouns

- Technical or industry-specific terms

Human interpretation

Depending on how the ASR software is used, errors may not significantly affect usability. If a human user can read a transcript full of errors and still understand the speaker’s original meaning, then even a high WER does not interfere with the ASR’s usefulness.

Word error rate is an important calculation to make when it comes to using speech recognition technology, but it is important to consider other factors and context.

How does Rev measure up?

Rev beat out the competition when it comes to Word Error Rate and accuracy. Download our State of ASR Report to see the numbers.

If you have other questions about WER, Rev’s speech-to-text API, or our human transcription services on Rev, feel free to contact us at any time. You can also easily get started now with our automatic transcription services.

Rev also has developed tools so you can calculate word error rates yourself.

Subscribe to The Rev Blog

Sign up to get Rev content delivered straight to your inbox.