The History of Closed Captioning: The Analog Era to Today

Join Rev for a quick tour of the history of closed captions—exploring their evolution and how they became so important today.

Closed captions are so common that we often take them for granted — but that hasn’t always been the case. So here’s a short tour of the evolution of closed captions and how they became so important. First, though, let’s dispel a few common misconceptions.

Closed Captions: What They Are (And Aren’t)

Not even Netflix fully distinguishes captions from subtitles, so it’s no surprise that most people think they’re the same thing. The two do share a history: both are forms of audiovisual translation (AVT). But there are a few key differences between them.

Subtitles today are translations for audiences that don’t speak the video’s original language. They only include dialog and do not meet accessibility requirements for hearing disabilities. They are also usually created before the video’s release.

Captions exist to make a video’s audio accessible to viewers who are deaf or hard of hearing. Thus, they include both dialog and descriptions of background noise. They may be open (permanent) or closed (the viewer may turn them on or off).

Closed Captions: Why They’re Important

We’re all familiar with the benefits of closed captions for deaf and hard of hearing audiences. But many people don’t realize that captions offer significant benefits for a multitude of other reasons.

For one, they help make your videos more visible. Closed captions give search engines text to crawl and index, which they can’t do with audio alone.

Captions also improve average watch time on your videos by engaging your audience. What’s more, if you are an educator or employer producing videos, the law may require you to provide closed captions. Many studies have shown that besides improving accessibility, captions also improve learning outcomes. And a few other situations also require captioning. So, since they’re so important, how did modern closed captions come to be?

The Early Years: Silent Films and Intertitles

The first films didn’t have any original audio at all. Yet they often depicted scenes that in real life would include sound. To help audiences follow the story, filmmakers used an early form of subtitles. Today we call them intertitles because they came between scenes, unlike modern subtitles. The earliest films with these titles appeared in the late 1800s. Two filmmakers pioneered them: James Stuart Blackton and Robert W. Paul. In the early 1900s, Edwin S. Porter also became known for his creative titles in films such as College Chums. Early films were thus accessible to both deaf and hearing people. But by the late 1920s, filmmakers were creating movies with sound. Titles fell out of use, appearing unnecessary and cumbersome.

Talking Films: The Development of Modern Subtitles

With the end of the silent era, deaf actor and producer Emerson Romero found himself out of a job. Since films were no longer accessible for deaf people, he tried to create subtitled versions. Unfortunately, his effort didn’t gain much traction at the time. But Romero’s films inspired the push for closed captioning a few decades later.

And subtitles didn’t completely die out. Filmmakers had translated silent films, and talking films continued to do the same. The market foreign audiences created seemed to make the effort worthwhile. Thanks to this, the movie industry continued to develop subtitling technology.

In the mid-1920s, a man named Herman G. Weinberg began to translate foreign films into English using a Moviola. This machine allowed him to edit subtitles directly into a film while watching it. Over 60 years, he translated more than 300 movies, helping develop modern subtitles. His work paved the way for modern closed captions by making the idea more realistic.

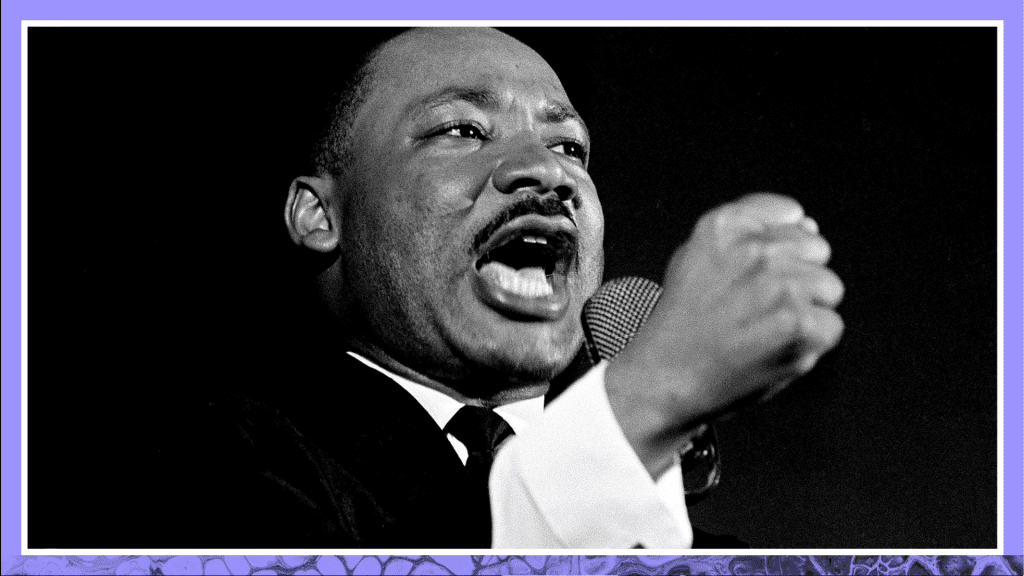

The Fight for Closed Captioning: The Analog Era

In 1950, Dr. Edmund Boatner and Clarence O’Connor founded Captioned Films for the Deaf, Inc. The program started out private, backed by the Junior League of Hartford, Connecticut. But the two men, both superintendents of schools for the deaf, knew they could do more. In 1933, the government had backed a talking books lending program for the blind. So Boatner and O’Connor set their sights on the same type of lending program for captioned films. That dream became a reality by 1958 when the Captioned Films program began. It first existed to allow deaf people to enjoy entertainment films. But soon, the effort expanded to make educational films accessible too.

Unfortunately, television programs remained out of reach for deaf audiences. Still, a precedent had been set. In 1971, a special television set showed a captioned version of The Mod Squad. These kinds of captions were not available for most people, sadly. Then, in 1972, PBS and ABC pioneered two open-captioned programs: The French Chef by Julia Child, and ABC’s 6:30 evening newscast.

The Battle Won: Captions Become Commonplace

Little by little, the trend began to catch on. The government started funding research into better captioning technology in 1973. By 1976, PBS was working on a way to edit captions directly into television broadcasts. A box became available to decode those captions on regular televisions in 1980.

For early captions, specially trained court reporters would write the captions for a program. Then, the special decoder box would allow viewers to see them on their screen. By the time the 1990s rolled around, captioned programs were becoming commonplace. An embedded decoder chip was even invented, which meant televisions themselves could decode captions for viewers.

Deaf Americans received new support in 1990 with the Americans with Disabilities Act, which protected disabled people against certain types of discrimination. That same year, the Television Decoder Circuitry Act required most new televisions to be equipped to decode captions. Finally, the Telecommunications Act of 1996 won a decisive victory for accessible captioning by directing the FCC to require captions on television programming. At the same time, captioning technology was becoming digital.

Captions in the Digital Age and Artificial Intelligence

By 2012, viewers were increasingly watching videos on the internet, not just in theaters or on television. So the FCC required that the technology to decode captions be built into electronics. That’s why closed captioning is so widely available today on our mobile devices and laptops. And the FCC has continued to update its requirements as technology keeps evolving.

The technology itself has also changed since the 20th century. In the analog age, the appearance of captions was fixed. Consumers could turn them on or off, but could not choose their size, background, or color. Now, digital closed captioning allows viewers to customize the style. This makes captions even more useful for viewers who may also have vision impairments.

Until recently, the only way to get good-quality captions was to have them transcribed by hand. Automatically generated captions have been notorious for bad transcriptions. This has made it harder for deaf and hard-of-hearing viewers to access mainstream content.

But digital technology is moving at warp speed these days. Advances in artificial intelligence have made automatic speech-to-text transcription far more accurate, which saves video producers time in creating transcripts. Even more exciting, inaccurate auto-generated captions may soon be history: recent advancements have made it possible to generate accurate captions in real time.

Once, a deaf film producer was forced to hand-splice subtitles into films for the deaf and hard-of-hearing. His work was hardly noticed. But we’ve come a long way. If he knew how fully his dream has come true, he would surely rejoice.