Rev Blog

Featured Posts

Most Recent

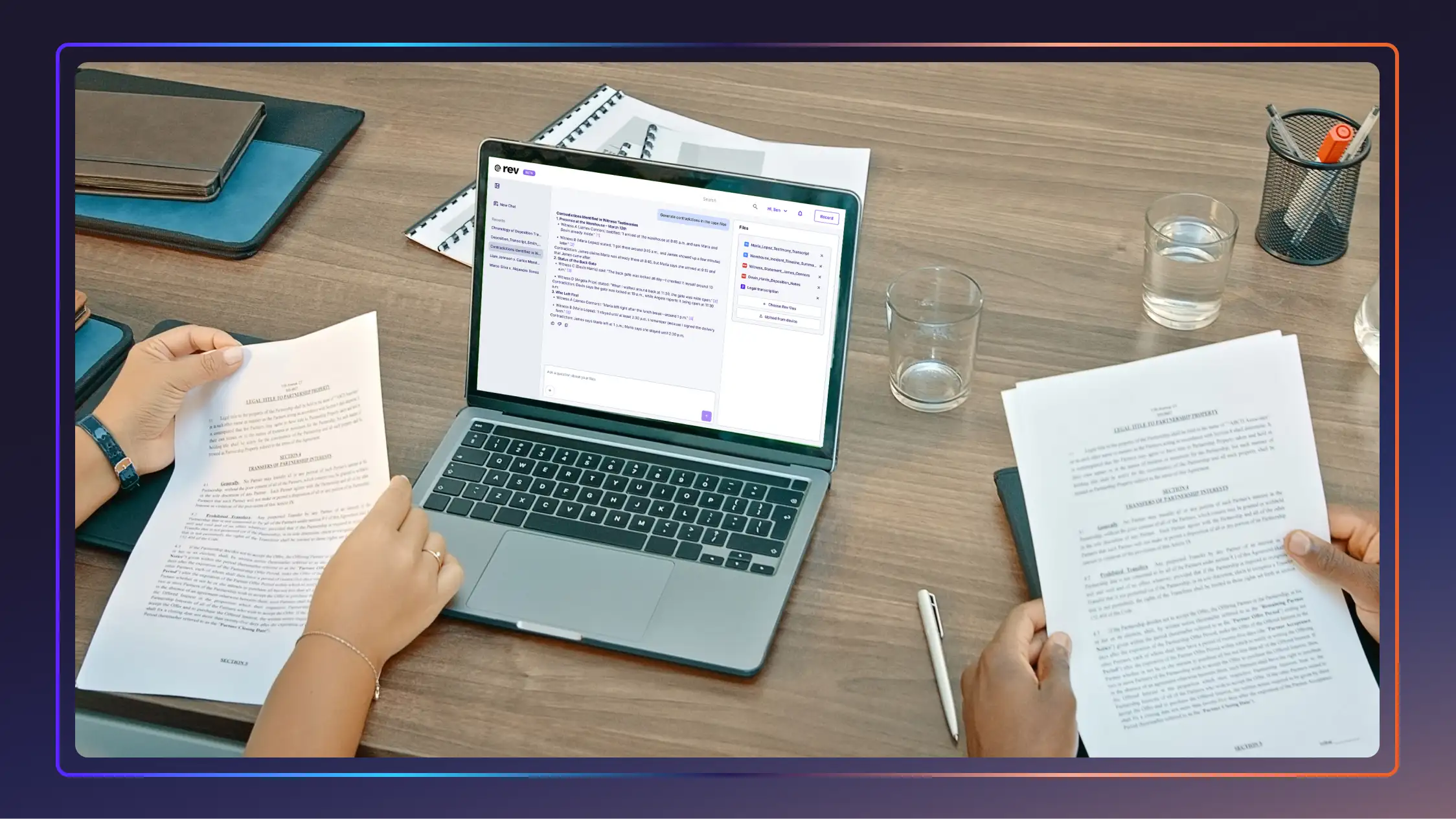

Top Legal AI Software Worth The Investment

Raise your firm’s productivity and maximize billable hours with these top 9 legal AI software companies that are worth the investment.

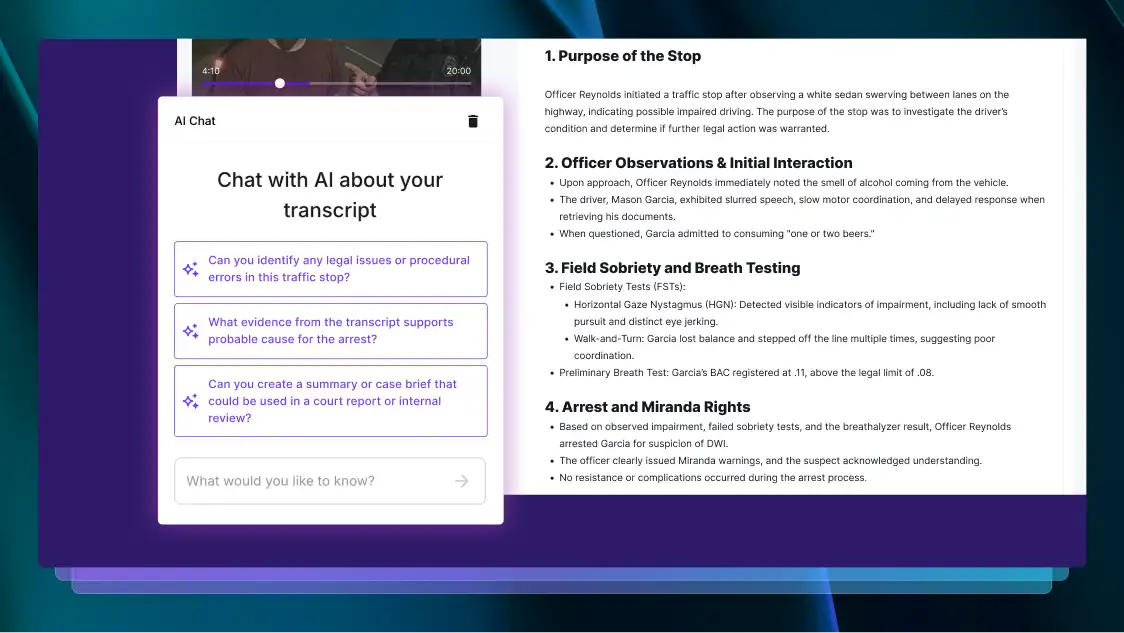

5 Challenges For Law Enforcement + Their Tech Solutions

Click to read how Rev breaks down five critical challenges facing modern policing and explores how law enforcement technology can provide real solutions.

An Expert Guide to Automatic Speech Recognition Technology

Understand automatic speech recognition (ASR), how speech recognition AI works, and why accuracy is critical in high-stakes industries.

Legal Subscription Services Better Than ChatGPT Wrappers

Legal subscription services can’t just be ChatGPT in shiny wrappers; they need to truly understand and integrate with what attorneys need. Here’s our guide to the best.

Reasonable Doubt: Definition, Burden, And Strategies

Understand what reasonable doubt means, the prosecution’s burden of proof, and how defense attorneys identify doubt in complex criminal trials.

Upgrade Police Evidence Management With Technology

Inefficient police evidence management isn't just a workflow issue—it's a barrier to justice. Luckily, technology is here to help. Click to learn more.

Best Harvey AI Alternatives for Attorneys (+Accuracy Comps)

The best legal AI tools help attorneys address specific needs, optimize firm workflows, and improve client cases. See how these Harvey AI competitors compare.

42 Criminal Justice Statistics for the United States

The American criminal justice system is vast and ever-changing, with technology and reforms being introduced every day. Let’s look at some key criminal justice data.

58+ Chatbot Statistics That Demonstrate The Future of AI

The popularity of AI chatbots for businesses and beyond doesn’t seem to be slowing down. Let’s look at some statistics that cover the popularity, use cases, and future of AI chatbots.

60% of Americans Get Their Legal Knowledge From Media Consumption

A new survey reveals what Americans think lawyers do, spotlighting the growing need for solutions that close the gap between legal reality and public perception.

4 in 5 Legal Professionals Are Burned Out: Can AI Be the Lifeline?

New report: 4 in 5 lawyers experience burnout. Learn how overwhelming workloads lead to stress and how AI is helping legal professionals find balance.

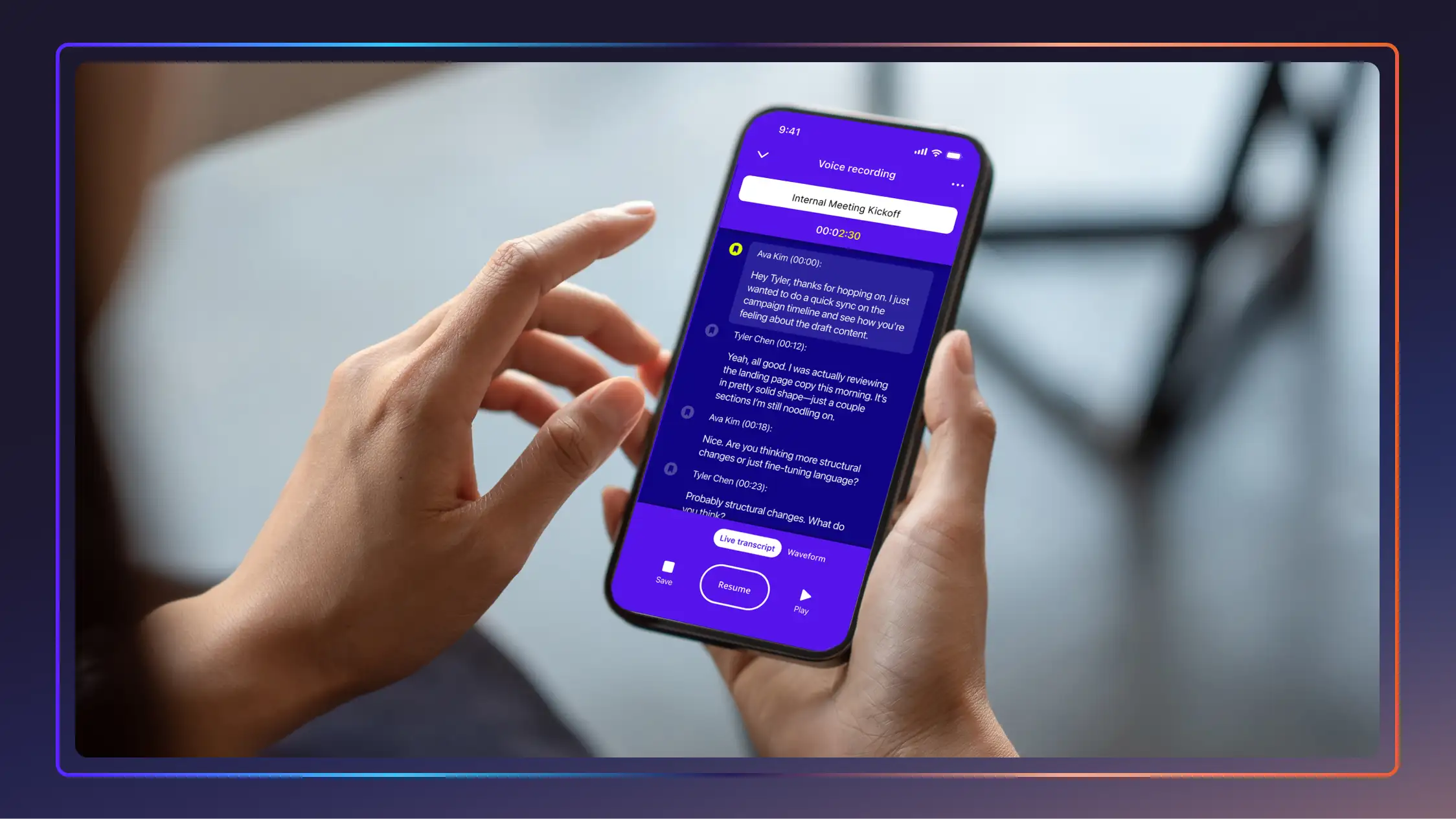

How to Record Audio on an iPhone in Three Different Ways

Master iPhone audio recording with our complete guide. Learn to use Voice Memos, Rev's recorder app, and online tools—plus how to get your audio transcribed.

Transcript to Trial: Leveraging Deposition Technology

Discover how firms are saving 12 hours per week per attorney by switching to deposition technology with this whitepaper from Rev. Click to download.

Building a Speech Recognition System vs. Buying or Using an API

The pros and cons of building your own speech recognition engine or system vs. using a prebuilt service or API that converts speech to text.

Guide to Speech Recognition in Python with Speech-To-Text API

Learn how to add speech recognition to applications, software, and more using Python and our speech-to-text API.

Minneapolis Mayor on ICE Drawdown

Minneapolis Mayor Jacob Frey addresses the end of Operation Metro Surge. Read the transcript here.

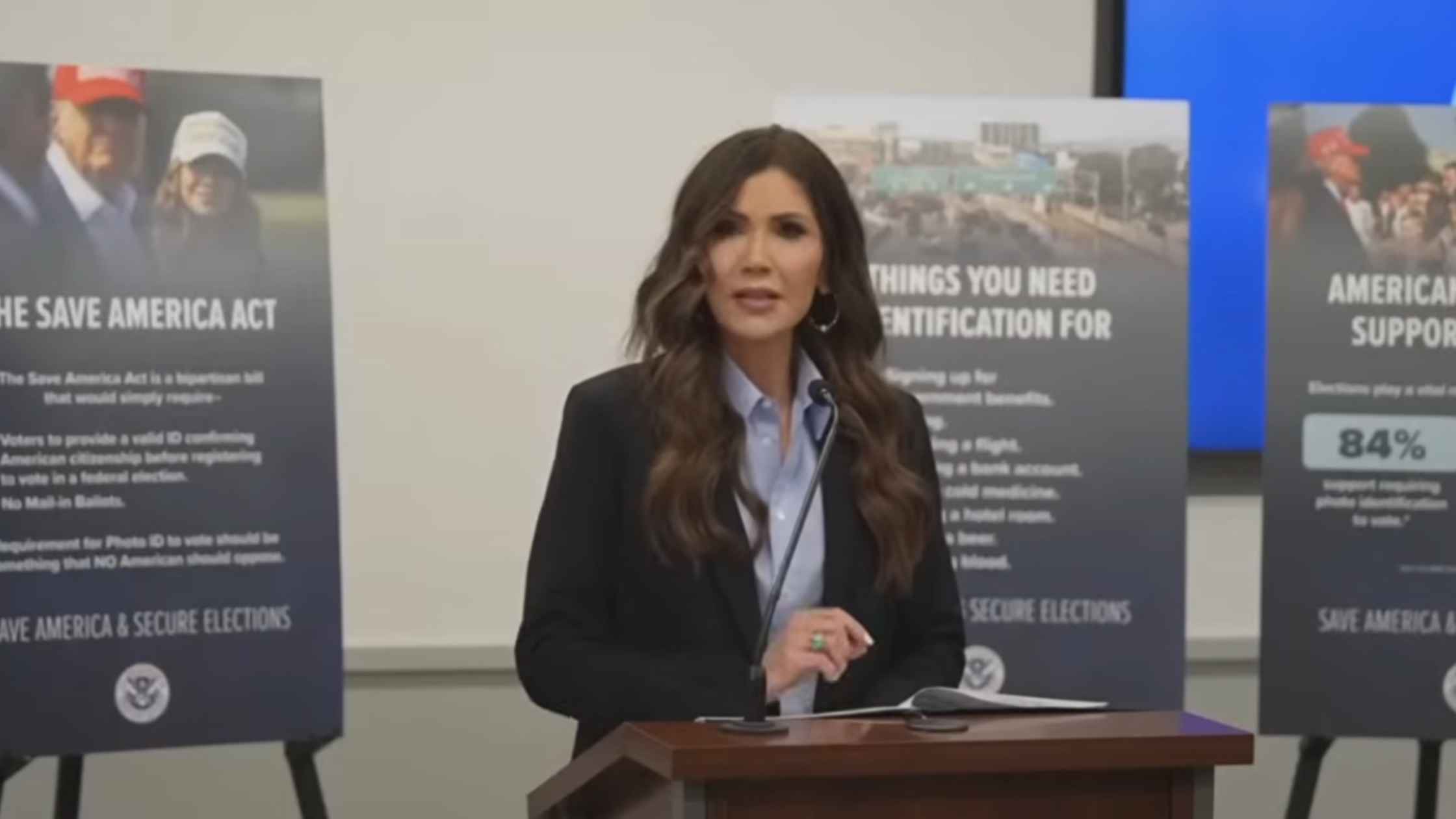

Noem on Elections

DHS Secretary Kristi Noem holds a news conference on election security ahead of the 2026 midterms. Read the transcript here.

Operation Metro Surge Ends

Border Czar Tom Homan announces the end of the federal immigration enforcement operation in Minnesota. Read the transcript here.

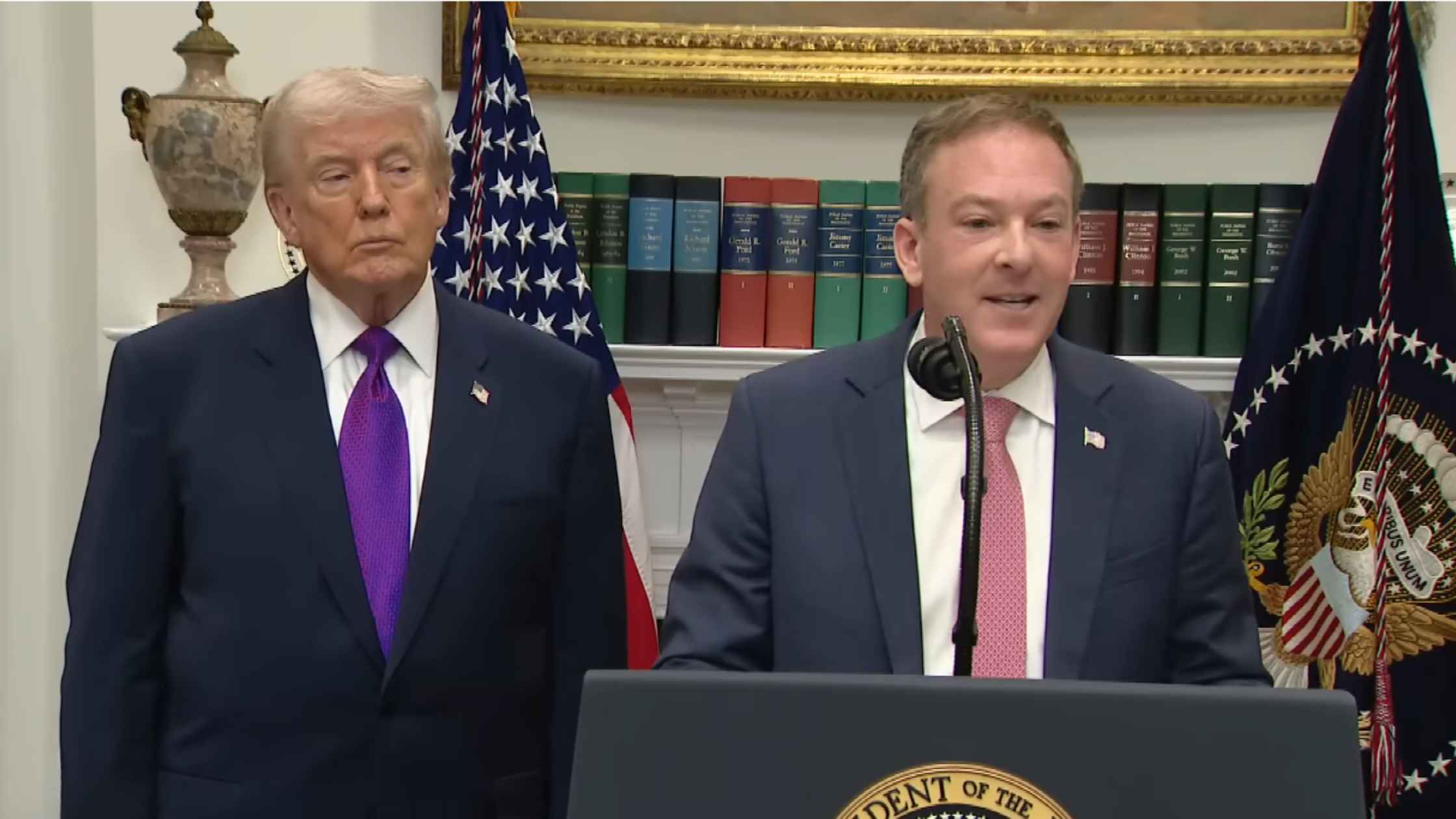

EPA Deregulation Announcement

Donald Trump and Lee Zeldin announce the end of the scientific basis for U.S. action on climate change. Read the transcript here.

Bondi Testimony Part Two

Pam Bondi appears at the House Judiciary Committee hearing on Justice Department oversight part two. Read the transcript here.

Bondi Testimony Part One

Pam Bondi appears at the House Judiciary Committee hearing on Justice Department oversight part one. Read the transcript here.

Subscribe to The Rev Blog

Sign up to get Rev content delivered straight to your inbox.
